Unreal Engine 5: Nanite: What is it, and why does it matter?

Nanite, explained for people who aren't huge computer nerds

Unreal Engine 5 became available to download recently, and if you follow game developers on Twitter, you’ve probably seen people raving about one of its new features, Nanite - and for good reason! Nanite allows for some pretty amazing stuff, but if you don’t know what that stuff is, or why it’s amazing, you’ve come to the right place.

But first, we have to back up a little bit.

What’s a mesh?

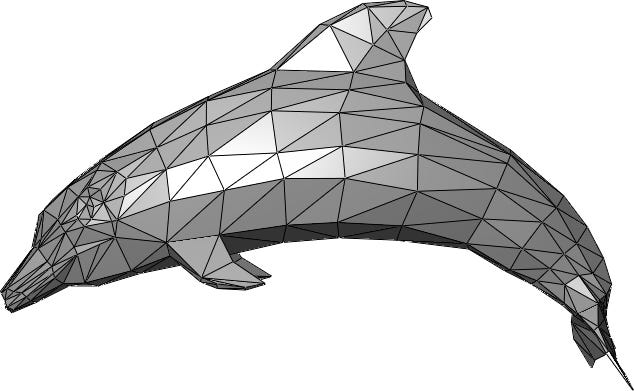

A polygon mesh is a way of creating 3D shapes out of polygons, or “polys” for short. A polygon is a type of 2D shape, and in polygon meshes, the shape used is almost always a triangle (so “polys” and “tris” are often used interchangeably).

Pretty much every 3D object in a video game is a mesh. And similar to how a photo with a lot of detail is “high resolution”, a mesh with more details needs more polygons, so is “high-poly”.

In the above image, we can see an example of a low-poly mesh dolphin, with a “wireframe” view which shows us the edges between all the polys. It’s a good mesh, but it’s low-poly, so it has some obvious sharp angles - a limitation of polygon meshes is that polygons can’t have round edges, and real life has a lot of round stuff.
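If it helps to see a mesh as data, here's a minimal sketch of about the smallest mesh I can think of: a flat square made from two triangles. The names (`vertices`, `triangles`, `wireframe_edges`) are made up for this example and don't come from any particular engine. A mesh is basically a list of points, plus a list of triangles that say which three points to join up.

```python
# A tiny triangle mesh: a flat square built from two triangles.
# Each vertex is an (x, y, z) point in 3D space.
vertices = [
    (0.0, 0.0, 0.0),  # 0: bottom-left
    (1.0, 0.0, 0.0),  # 1: bottom-right
    (1.0, 1.0, 0.0),  # 2: top-right
    (0.0, 1.0, 0.0),  # 3: top-left
]

# Each triangle is three indices into the vertex list above.
triangles = [
    (0, 1, 2),
    (0, 2, 3),
]


def poly_count(tris):
    """The 'poly count' of a mesh is just how many triangles it has."""
    return len(tris)


def wireframe_edges(tris):
    """Collect the unique edges between vertices, which is what a wireframe view draws."""
    edges = set()
    for a, b, c in tris:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with the smaller index first so shared edges count once.
            edges.add((min(u, v), max(u, v)))
    return sorted(edges)


print(poly_count(triangles))            # 2
print(len(wireframe_edges(triangles)))  # 5 (four sides plus the shared diagonal)
```

The dolphin is the same idea, just with a lot more points and triangles.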

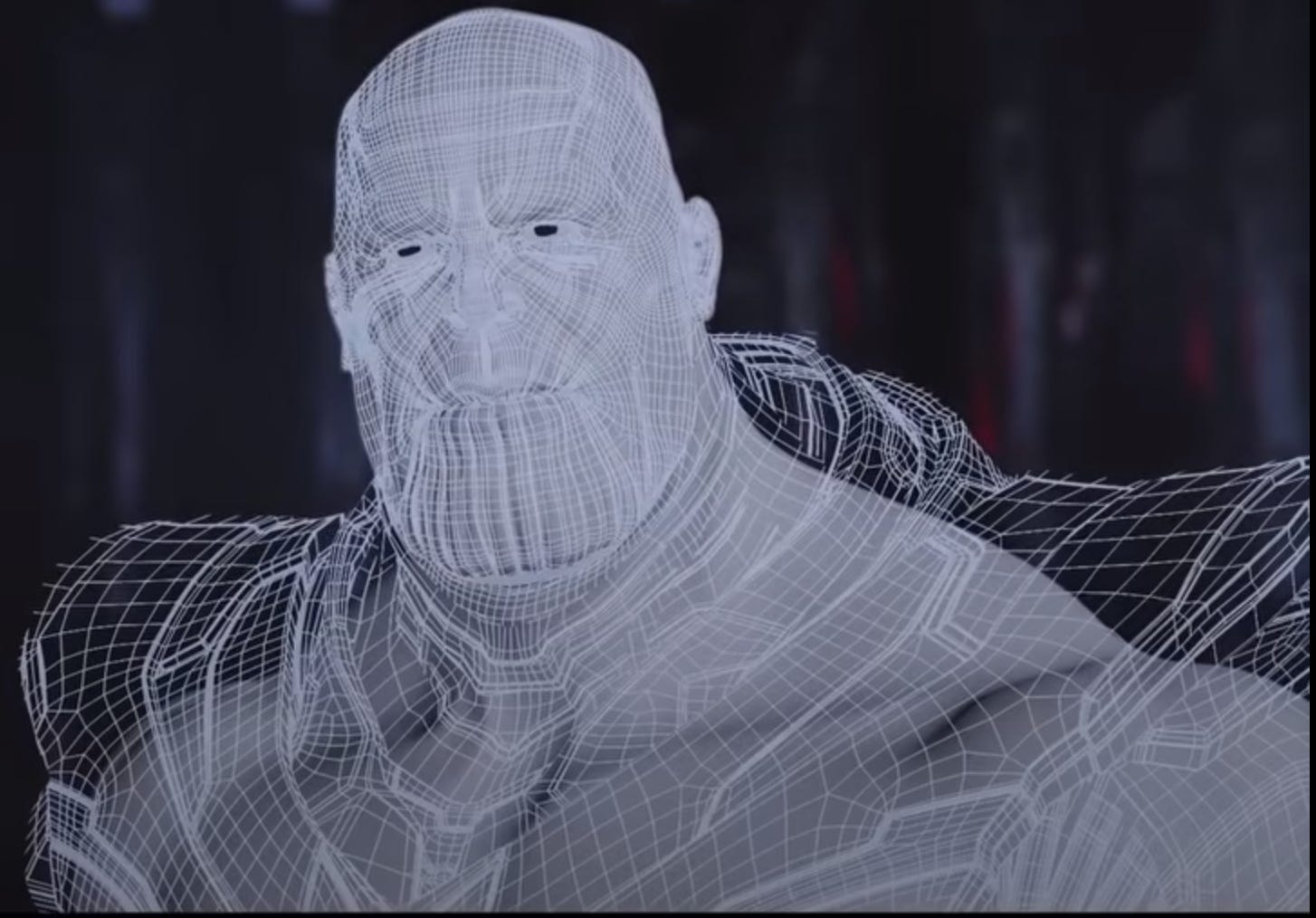

The workaround to this limitation is just to use very high-poly meshes. Check out a wireframe of Thanos, from Avengers: Endgame:

As you can see from the wireframe, that’s a lot of polys, especially on his face!* That’s the amount of detail it takes to get a face to look real in a close-up, and animate naturally. And there are a lot of other things in a virtual environment that need that level of detail to achieve the photorealistic look that movie CGI has these days**. Unfortunately, that level of mesh detail hasn’t been brought to video games.

When making a movie, if you’re rendering (generating the video you can watch), each frame can take minutes or even hours to render***, but it doesn’t matter, because you string the frames together at 24 frames per second (fps) after they’re all rendered, and you have a movie.

When making a video game, rendering needs to happen in “real-time” so the game can react to player input, and the slowest framerate games are expected to run at is 30fps, which gives at most about 33.3 milliseconds to render each frame. Movies are also rendered on some of the most high-end computers in the world, which a PS4 or Xbox One definitely is not - no hate, those things are just made to be affordable, and they’re over 7 years old now.
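The frame-time arithmetic is simple enough to check yourself. This is just a quick sketch: the function names are mine, and the one-hour movie frame is a hypothetical figure picked to match the "minutes or even hours" range above.

```python
def frame_budget_ms(fps):
    """Milliseconds available to render one frame at a given framerate."""
    return 1000.0 / fps


def realtime_frames_in(seconds, fps):
    """How many real-time frames a game renders in the given number of seconds."""
    return seconds * fps


for fps in (30, 60):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
# 30 fps -> 33.3 ms per frame
# 60 fps -> 16.7 ms per frame

# Hypothetical: a single movie frame that takes one hour (3600 seconds) to render.
# In that same hour, a 30fps game has to get through this many frames:
print(realtime_frames_in(3600, 30))  # 108000
```

So a game running at 30fps gets roughly a hundred-thousandth of the time per frame that the hypothetical movie frame got.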

High-poly meshes also take up more data, which means if games had them, games would be bigger and take longer to install, and games already take long enough as is.
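To get a feel for how data grows with poly count, here's a rough back-of-the-envelope sketch. The byte sizes are assumptions for a bare-bones, uncompressed mesh (positions and triangle indices only). It also leans on the rule of thumb that a closed triangle mesh has roughly half as many vertices as triangles. Real game meshes also store extra per-vertex data, so treat these numbers as a lower bound rather than a measurement.

```python
BYTES_PER_VERTEX = 12    # assumption: position only, three 32-bit floats
BYTES_PER_TRIANGLE = 12  # assumption: three 32-bit vertex indices


def rough_mesh_size_mb(triangle_count):
    """Very rough uncompressed size of a mesh, in megabytes."""
    # Rule of thumb for closed triangle meshes: about half as many vertices as triangles.
    vertex_count = triangle_count // 2
    total_bytes = vertex_count * BYTES_PER_VERTEX + triangle_count * BYTES_PER_TRIANGLE
    return total_bytes / 1_000_000


print(rough_mesh_size_mb(2_000))      # a low-poly prop
print(rough_mesh_size_mb(1_000_000))  # 18.0, a movie-style mesh
```

Under these assumptions the size scales linearly with poly count, so a million-triangle mesh is about 500 times bigger than a 2,000-triangle one.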

Video games have to do a lot in just milliseconds, on sub-optimal hardware, so high-poly meshes that take up render time have to go.

Or do they?

*It's not completely clear, but it's likely the mesh of Thanos used in the movie is even higher-poly than the one shown.

**Anything jagged, like rocks, can also require a lot of polys. Basically polygon meshes are really good for stuff that’s mostly flat, which doesn’t cover a lot.

***High-poly meshes aren’t the only thing (and are probably the least significant thing) pushing up frame times on movies, but the point is that movies have maximal frame time and memory budgets, while video games have minimal ones.

Games do have pretty detailed meshes though (more so than that dolphin, by a lot!), so how does that work?

So, games can’t have a ton of polys on-screen at once. But what if you could ensure that the only polys on-screen are the ones that are actually visible? When you’re looking at a high-poly object from a distance, you can’t see all that detail after all, and some details are so small, you can only see them really close up.

Games already have a solution to this, which you’ve probably seen in action if you’ve played pretty much any open-world game. The existing solution uses discrete Level of Detail (LoD) - basically, for every object, there’s multiple meshes, and each mesh has a different level of detail (a different number of polys). As you get closer to the object, the low LoD mesh gets replaced with a higher LoD mesh, until you get to the highest detail.
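Here's a minimal sketch of what discrete LoD selection boils down to. The distances and poly counts in `LODS` are invented for illustration, and real engines often switch on how big the object appears on screen rather than on raw distance, but the idea is the same: pick one of a handful of pre-made meshes.

```python
# Hypothetical LoD table for one object, ordered from most to least detailed.
# Each entry is (maximum distance in metres, triangle count of that mesh).
LODS = [
    (10.0, 20_000),   # LoD 0: used up close
    (50.0, 5_000),    # LoD 1
    (200.0, 800),     # LoD 2
]


def select_lod(distance, lods=LODS):
    """Return (LoD index, triangle count) for an object at the given distance."""
    for index, (max_distance, tri_count) in enumerate(lods):
        if distance <= max_distance:
            return index, tri_count
    # Further away than every threshold: stay on the least detailed mesh.
    return len(lods) - 1, lods[-1][1]


print(select_lod(9.9))   # (0, 20000)
print(select_lod(10.1))  # (1, 5000)
```

Notice how stepping from 9.9 to 10.1 metres drops the mesh from 20,000 polys to 5,000 in one go. That sudden jump is where visible switching comes from.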

This approach produces massive performance and visual gains compared to having no LoD system, but swapping out entire meshes is pretty heavy-handed, which can lead to a couple problems. The first is that it can be tricky to manage loading meshes in and out, especially in a way that’s seamless - if you’ve ever experienced “pop-in”, where objects just suddenly appear or switch LoD right in front of you, you’ll know what I’m talking about, and even when it happens at a distance, it can be pretty noticeable.

The second is that the heavy-handedness means the polys on-screen won’t always be distributed efficiently - there’ll always be some objects that should be on higher LoDs, and others that should be on lower LoDs, so you have to carefully and manually tune the number of polys in each LoD, and the distance at which each LoD is used, to balance visuals and performance.

And of course, if you’re making a game with discrete LoD, then you have to go to the effort of making multiple meshes for every object, which can add a lot of extra work.

The holy grail of LoD systems is continuous LoD, which is where you just use one high-detail mesh, and some code automatically removes or adds detail to that mesh depending on how far away or close you are to it, continuously changing the detail of meshes as the player moves. This would solve both problems with discrete LoD: meshes would never pop in, because they would change gradually instead of at set distances, and meshes would never be too high- or low-poly, because the code that does the continuous LoD could make sure of that.
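To show the "gradual instead of at set distances" part, here's a toy sketch that only works out how many polys a mesh should get at a given distance. It assumes the object's on-screen area shrinks with the square of the distance, and every number in it is invented. It doesn't do the hard part (actually simplifying the mesh), and it isn't how any real engine is implemented.

```python
def target_triangles(distance, full_tris=1_000_000,
                     full_detail_distance=5.0, min_tris=100):
    """Toy continuous LoD budget: triangle count falls off smoothly with distance."""
    if distance <= full_detail_distance:
        return full_tris
    # Assumption: on-screen area (and so the useful detail) scales with 1 / distance^2.
    scale = (full_detail_distance / distance) ** 2
    return max(min_tris, int(full_tris * scale))


for d in (5.0, 10.0, 10.1, 100.0):
    print(d, target_triangles(d))
```

Unlike a table of fixed meshes, stepping from 10.0 to 10.1 metres here only trims the budget slightly, so there's no single distance where the detail suddenly jumps.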

The drawback of continuous LoD is that it needs a pretty decent GPU (Graphics Processing Unit) to run on, so it hasn’t been possible for mainstream gaming - as I mentioned earlier, the PS4 and Xbox One are pretty weak machines these days. Good thing new consoles just came out - the PS5 and Xbox Series X are both much beefier than their predecessors, GPU included.

Nanite Does Continuous LoD

(Thanks to Emilio López for letting me use his video. I’d recommend checking out his post, A Macro View Of Nanite (where I stole the video from), for a more technical overview of Nanite)

In the video above, you can see Nanite in action. In the first view, where clusters* of polys are assigned random colours, you can see that as the camera pans out, the clusters get bigger, and the object gets less detailed, and then the object becomes more detailed as the camera pans back in. Next, the camera does the same action, but with a normal view, so you can see how it would look during gameplay... and it’s seamless. The increased power of the new consoles (which can handle a lot more polys on screen), with Nanite efficiently managing the number of polys on-screen at once, means having movie-detail meshes in future games is a possibility.
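For a rough idea of what a "random colour per cluster" view involves, here's a toy sketch. It just chops a mesh's triangles into consecutive chunks and gives each chunk a colour. The chunk size of 128 and the function names are assumptions for illustration. Real clustering groups triangles that sit next to each other on the surface, which this sketch doesn't attempt.

```python
import random


def build_clusters(triangle_count, cluster_size=128):
    """Split triangle indices 0..triangle_count-1 into consecutive chunks.

    Simplification: real clustering groups triangles that are near each other
    on the mesh; this just slices the list in order.
    """
    return [
        range(start, min(start + cluster_size, triangle_count))
        for start in range(0, triangle_count, cluster_size)
    ]


def random_cluster_colours(clusters, seed=0):
    """Give every cluster one random (red, green, blue) colour for a debug view."""
    rng = random.Random(seed)
    return [
        (rng.randrange(256), rng.randrange(256), rng.randrange(256))
        for _ in clusters
    ]


clusters = build_clusters(1000)
colours = random_cluster_colours(clusters)
print(len(clusters))      # 8
print(len(clusters[-1]))  # 104 (the last chunk is only partly full)
```

When the object loses detail, there are fewer, larger triangles to go around, which is why the coloured patches in the video look bigger as the camera pulls away.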

And the issue of high-poly meshes increasing a game’s install size isn’t a big problem with Nanite - it can store meshes in a way that’s highly efficient.

What Nanite enables is pretty amazing, but it does come with some caveats.

The first is that a Nanite mesh cannot be deformed in any way - there’s a type of animation, “skeletal animation”, that most games and movies use for their characters (among other things), and the way skeletal animation works is by moving around and deforming the polygons of a mesh. That’s off the table with Nanite, though I wouldn’t be surprised if Epic are working on adding this feature down the line.

Secondly, the art workflow for high-poly meshes is still kind of unclear - it’ll probably get figured out, but if it’s awkward to actually produce high-poly 3D art for the time being, it might take a while for games with high-poly meshes to start popping up. Though it’s worth noting that in the AAA space in particular, high-poly meshes are already being created, with the actual in-game meshes being based on those high-poly meshes. The potential for those original high-poly meshes to go straight into games could save a lot of work down the line.

The next thing is that as I mentioned earlier, continuous LoD (which is what Nanite does) takes up a fair amount of GPU processing power on its own, and the GPU is needed for a lot of other stuff in games, so if you aren’t using meshes that are high-poly enough to cause some slowdown, then I don’t know that Nanite would be worth the overhead. (That said, once you’re using Nanite, you can add a bunch more high-poly stuff and the impact to performance stays fairly negligible)

The fact that Nanite needs the GPU so much means I doubt we’ll see many games on PS4/Xbox One using Nanite. Even though it’s designed to keep the number of polys on-screen pretty low (relative to how high-poly the meshes are), that’s still a lot of polys on-screen. On top of that, it could be a little pointless to have high-poly meshes without a nice lighting system to take advantage of them, and that would take even more GPU power, which the last-gen consoles just lack.

(For more info on how to use Nanite, and other potential pitfalls, check out the official docs. I didn’t include all the pitfalls they mention, since the docs are more about “here’s how to use the thing” than evaluating the value of the technology)

That said, Nanite can still run well on old-ish hardware. Here’s a glorious shitpost of a test, running Nanite flawlessly on a GTX 1060 (a 5 year old GPU that’s near the bottom of the 10-series range, which is now 2 generations old), turning 15FPS into 60:

Obviously in that video, nothing else is really going on aside from Nanite doing its thing, but in my opinion, the fact it works so well is pretty promising.

*To work its magic, Nanite puts polys into clusters. For info on how Nanite’s magic works, I’d recommend Brief Analysis of Nanite by @ldl19691031

Conclusion

In conclusion, Nanite is pretty darn cool, and with Lumen as well, I think UE5 heralds the real beginning of next-gen game development. The fact that it enables such high-poly meshes could open up other doors people haven’t even thought much about too - photogrammetry (generating meshes of real objects from photographs) can’t really be used in gamedev because of the super high-poly meshes it produces, but Nanite could make it possible to throw them into a game pretty easily.

But it’s not magic, not really, and so I’m sure there are limitations of Nanite that I haven’t realised yet, and surely those limitations will be discovered as people actually start trying to make games with it. But as it stands, things are looking pretty promising.

Here’s a bonus shitpost, but this time, it’s cursed: